Security and Behavioral AI Projects

Our security-focused research explores behavioral biometrics, continuous authentication, and adversarial robustness to strengthen user privacy and trust in modern computing systems. By harnessing motion sensors, touch data, and deep learning, we create lightweight, real-time authentication frameworks that safeguard smartphones even under adversarial conditions. These efforts extend to dynamic ensemble learning and decision-level fusion, enabling adaptive and explainable AI models across high-risk domains like cybersecurity, finance, and mobile platforms.

The growing integration of deep learning (DL) models into high-stakes domains, such as healthcare, finance, and autonomous systems, has made interpretability a cornerstone of trustworthy AI. Interpretable Deep Learning Systems (IDLSes), which combine powerful neural networks with interpretation models, aim to provide transparency into the decision-making process. However, the assumption that interpretation inherently adds security has recently been challenged.

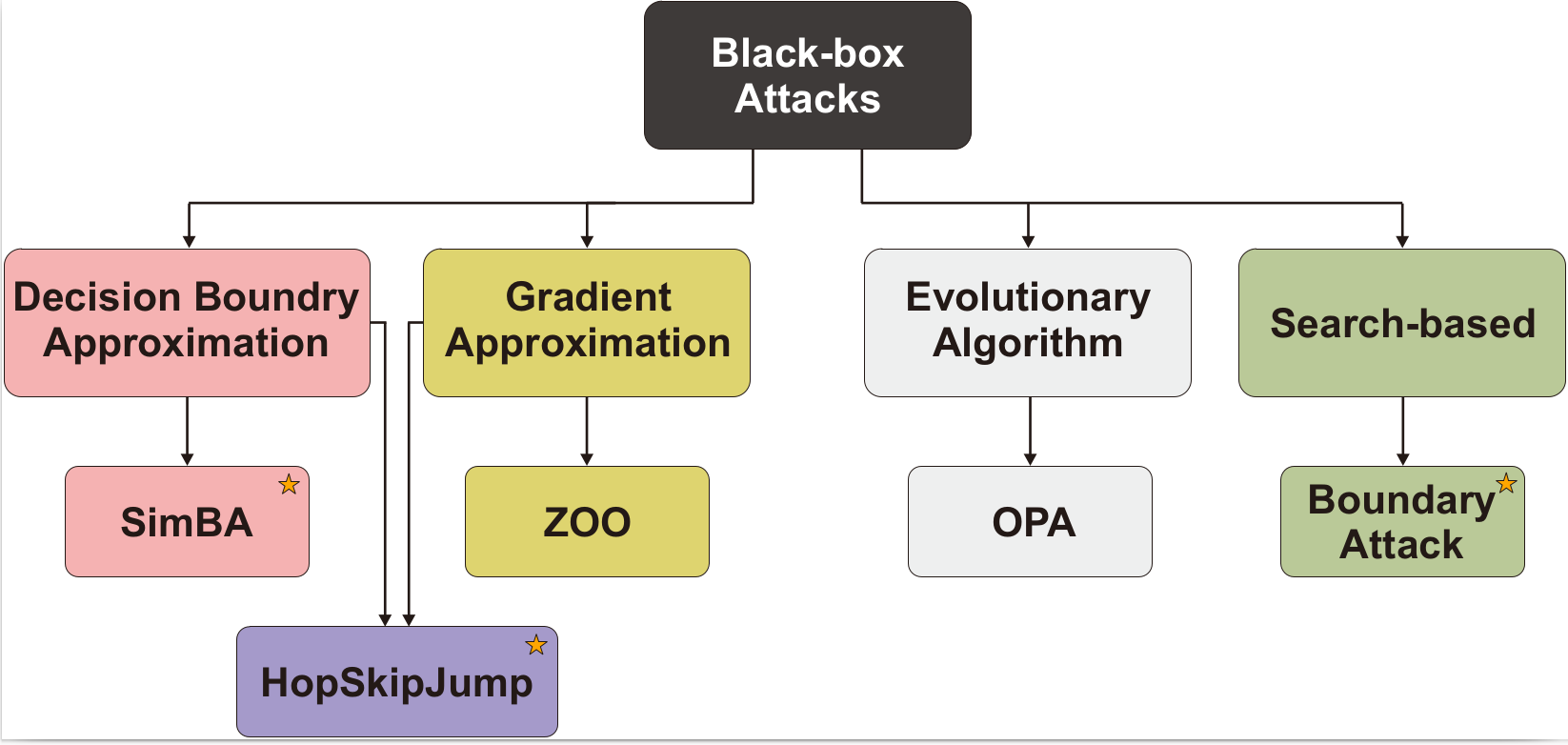

Adversarial attacks pose a serious challenge to the reliability and security of deep learning (DL) models. These attacks, often crafted by introducing imperceptible perturbations to input data, can cause models to make incorrect predictions with high confidence. As a result, understanding and mitigating such threats has become a critical area of research in the field of trustworthy AI. Defenses against adversarial attacks range from input preprocessing and adversarial training to robust model design, yet no single approach has proven universally effective.
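To make the idea of a small, crafted perturbation concrete, the following is a minimal sketch using the fast gradient sign method (FGSM) against a toy logistic-regression classifier. The source does not name a specific attack; FGSM, the weights, the input, and the `epsilon` budget are all illustrative assumptions chosen so the effect is easy to see, not a description of the project's method.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """Shift each feature by +/- epsilon in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumed values, for illustration only).
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.2, 0.1, 0.1]
label = 1          # true class of x
epsilon = 0.1      # maximum change allowed per feature

clean_prob = predict(weights, bias, x)
adv_x = fgsm(weights, bias, x, label, epsilon)
adv_prob = predict(weights, bias, adv_x)

print(f"clean input:     p(class 1) = {clean_prob:.3f}")
print(f"perturbed input: p(class 1) = {adv_prob:.3f}")
```

In this toy setting, a change of at most 0.1 per feature is enough to push the predicted probability across the 0.5 decision threshold. Real attacks on deep networks follow the same principle but compute the input gradient through the full model.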

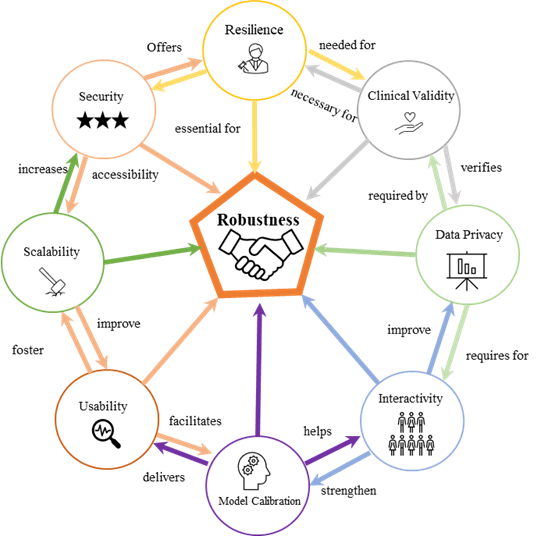

The project seeks to address the growing need for transparency, accountability, and interpretability in artificial intelligence (AI) systems used in healthcare. As deep learning and other machine learning techniques become integral to medical diagnostics, prognosis, and treatment planning, the “black-box” nature of many AI models poses significant challenges for clinical adoption, regulatory approval, and patient trust.

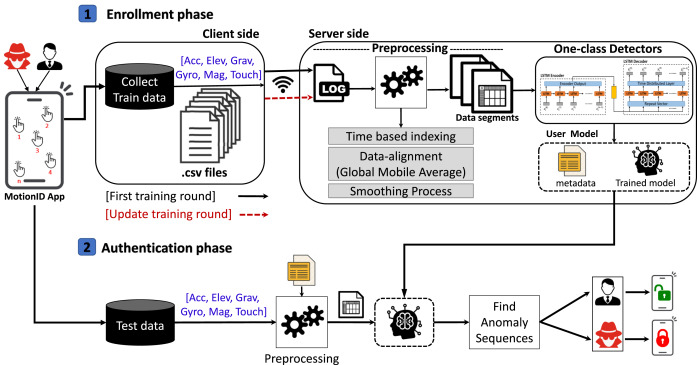

Traditional authentication methods, such as passwords, PINs, and even biometric systems (fingerprint, facial recognition), typically secure mobile devices only at the point of entry. They offer no protection for the rest of the session, leaving devices vulnerable to unauthorized access when unattended. To bridge this security gap, the InfoLab research group at Sungkyunkwan University (SKKU) has led a series of studies on continuous, sensor-based, and adversarially aware user authentication mechanisms.